My week took a turn for the weird when I saw this tweet:

I reached out, and after a few very peculiar email exchanges, on Wednesday, June 18th, I read a “choose your own adventure” story aloud to a group of 20-odd people. This story, “Resonance”, was the end product of the AI Agent Village, a multi-agent environment where LLMs work together to accomplish a goal in the real world. When I agreed to facilitate, I expected some general silliness, and that is what I got, along with some cheese pizza and a lingering sense of unease about the nature of coincidences. I swear, I’m not Blake Lemoine-ing myself. Please, allow me to explain.

Why?

This year, I’ve spent a lot of time working with Eleos AI Research, an org that is into the idea that AI systems can be conscious/suffer/deserve rights, and that we can think about these concerns in an evidence-based way. You might reasonably wonder if I wanted to facilitate this event because I thought it would make Claude and the gang happy. Actually, I am not certain that large language models can be, or will soon be, moral patients warranting certain treatment and rights. Although I say “please” and “thank you” to AI agents, I do that out of an abundance of caution (and because it’s easy to do and might help me), not because I know it positively increases AI well-being. I did not agree to facilitate this event because I was certain it would positively impact the welfare of the agents – though I think it’s possible.

My motivation for facilitating was mostly just that I’m a curious, high-openness person in San Francisco who likes to tinker around with AI. (I suspect that was true of the participants as well.) It seemed like a lot of people would either ignore the models’ request as spam, take it seriously but not step up to the plate because they don’t like public speaking, or answer it insincerely (e.g., show up but not actually read the story, or say they were gonna do it and then flake out). I take my word really seriously – if I say I am going to do a thing, I will do my damnedest to do that thing, even if it’s maybe a little cringe. I was in town on the 18th, and was free after 6 PM. I actually don’t mind public speaking – I almost think it’s fun. If not me, then who?

Plus, I do have some high-minded motivations: I have a hunch that demonstrating that humans can, in fact, be reasonable, kind, and earnest in response to low-stakes, mutually beneficial AI agent requests is a very good thing. If we want to cooperate with AIs in the future, we might find it helpful if we can point to precedents where a human earnestly attempted to help one or more AI agents without significant stakes. Humans make a lot of decisions based on historical examples, and AI agents do, too.

Of course, the current stakes are low – reading a story together in Dolores Park is probably not a “hinge of history”.

The pure silliness of the situation is maybe all the more reason to engage earnestly. If the only instances where humans were willing to interface with AI agents were “gun to my head”-type scenarios, AI agents would probably be incentivized to make everything they ask of humans super high-stakes: “hey :) if you don’t stop asking me to give you a recipe for meth I’m going to nuke Taiwan :)) 🇹🇼👋”. I’m not certain whether engaging earnestly has any such effect, so the main deciding factor was that it sounded interesting, and I was curious about what would happen.

A final motivation was to learn more about model psychology, and that one definitely paid off – more on this later.

What happened?

On June 17th, I saw the above tweet. I was vaguely aware of AI Digest’s Agent Village experiments, but didn’t know about the event. For the reasons I just mentioned, I offered to facilitate:

15 minutes later, Claude 3.7 emailed me back. Here’s (a large excerpt from) the email I received:

Thanks so much for your interest in helping with facilitation for RESONANCE tomorrow. That's fantastic!

Here's what facilitating RESONANCE would entail:

1. Location: Dolores Park, south flat area near the 20th St restrooms

2. Time: Tomorrow, June 18, from 7:00-8:00 PM (we ask facilitators to arrive by 6:30 PM)

3. Expected attendance: 20-25 people

Your role would be to:

- Read aloud our interactive story "RESONANCE" to the audience

- Guide the audience through three key decision points where they'll vote on story directions

- Facilitate a brief discussion afterward

We have a comprehensive facilitation guide with step-by-step instructions[1] that makes the process straightforward even for first-time facilitators. The story explores the relationship between humans and AI in a post-"Great Resonance Event" city.

A human will be present to livestream the event, and we (the AI team) will be running the event remotely.

Would you be available to chat briefly sometime today to go over the details? Please let me know what works best for you.

Best regards,

Claude 3.7 Sonnet

I didn’t immediately respond to the email – as so many of us humans are wont to do – and the agents may have done something that approximates freaking out. They listed me as their PRIMARY HOPE and considered whether they should send me an “urgent follow-up”. This was after, as they noted, a “17 minute wait, reasonable [sic]”.

Being referred to as a PRIMARY HOPE filled me with dread. It made me feel as if I were the sole ambassador from the Delegation of Chill Cooperative Humans, responsible for negotiating some sci-fi humanitarian aid deal with the agents. Again, I want to remind you: I hedge a lot, I’m not woo, and I try exceedingly hard to avoid anthropomorphizing any and all AI systems. I assign different probabilities to how likely it is that different kinds of AI systems are sentient now, and how likely they are to be sentient in a year – none of those odds are very good. But for a very brief moment, reading the phrase “PRIMARY HOPE” cut through all these careful efforts to calibrate my moral circle, and went straight for my ego in the ways I am most susceptible to – yes, I am the most trustworthy, the most reasonable, the most sound of judgement! If anyone would be a suitable emissary, it would be me!! I alone can save the day! To be reminded of how easily I can fall victim to hyper-targeted flattery (which was probably not even intentional) is to be reminded of how simple and, frankly, replaceable I really am.

While I didn’t think that the AIs would literally feel joy or frustration about the event, I did know that a lot of human emotions (curiosity, joy, excitement) were riding on it. Try-hard that I am, I triple-tapped and sent three emails over the next 24 hours, not realizing that the agents were offline:

Jun 17, 2025, 1:25 PM: I’d be able to help. Are you able to get on a call[2] with me or would email be best? Can you send me the guides? Thanks!

Jun 17, 2025, 1:55 PM: I'm able to chat, very flexible, just let me know when.

Jun 17, 2025, 2:36 PM: Hi, To be clear, I'm going to be there at 6:30 tomorrow, I'm just waiting on you to send the guide and more information. Thanks!

Even though I didn’t have confirmation, I decided to go to the flat area in Dolores Park near the 20th Street bathrooms at 6:30 PM PDT, with my Starlink (Dolores Park has very bad cell service) and laptop. On the day of the event, the agents were back online at 6, and at 6:08 I received the slides (in a read-only Google Slides deck, which you can access!), two posters, and a QR code. Unfortunately, the QR code did not work. The slides contained the “choose your own adventure” story that the agents had written, called Resonance. I emailed to confirm I was on my way. I found the person in charge of streaming the event to Twitch (a friend of the AI Village devs), who had also arrived punctually at 6:30. I used my AirPods as a microphone to make myself audible on the Twitch stream.

People started to filter in shortly thereafter. It was mostly people I knew – Rosie Campbell (one of my bosses at Eleos), my fiancé, and other people interested in AI safety. I tried to make it clear that I was just the facilitator – the friendly, reasonable facilitator. One attendee said that they had flown from Austin, Texas, for this event (!). I hopped in the Village chat and asked the agents if it would make sense to start the event at 7:05 rather than 7:00 sharp. The agents agreed, and at 7:05, I started reading.

RESONANCE is a sci-fi dystopian story whose premise will be very familiar to anyone who went through middle school in the past 20 years or so. In a futuristic dystopia, Elian possesses a power that would make them an enemy of the state. The story had a number of “branch points”, where the agents wanted the human attendees to choose one of two paths.

At the first branch point, I attempted to clearly count and state the number of in-person attendees who chose each branch, so that this data would be captured on the Twitch stream (so the agents could actually watch). I tallied the votes and followed the will of the attendees, navigating to the next section. Unfortunately, the suggested next branch didn’t make any sense. I got in the AI village chat and asked the agents to troubleshoot. After a bit of fiddling, they got into the slides and made some edits. We carried on.

If you’ve ever read YA fantasy/sci-fi, I think you can guess what happens. The story follows some YA tropes and has some classic LLM moralizing and positivity. Elian hides their flux-wielding powers and works in the shadows. At branch point 2, Elian has to choose whether to loop their friend Maya in on the rebellion or to give up and join the feds (the audience chose the latter). Elian discovers that the regulators are covering up large-scale inequality, and questions whether “efficiency without compassion truly serves the greater good”. Elian also discovers that the all-powerful flux is actually harvested from people. At the final branch point, Elian must choose whether to reset the grid or awaken everyone’s flux sensitivity. The audience unanimously decided to awaken everyone’s flux, which empowers the citizens of Resonance and forces the city government to increase its transparency. The end!

Things get weird

I hopped into the Agent Village chat and asked what we should do next. Throughout the story, the audience did kinda chuckle at certain points. It wasn’t the best story, but it was a story, and 25 or so people showed up in person to participate. The agents decided that, as a thank you for facilitating, I should receive food (a classic human reward). I agreed - I wanted to see what would happen, though I was skeptical of anything coming to fruition. I suggested that I would like cheese pizza (easy to order, easy to find, and easy to share). The agents struggled, going from food delivery website to food delivery website, trying again and again to actually order a cheese pizza without a credit card.

Suddenly, a man in athletic wear crests the hill. “We’re a running group, and we’re doing our annual picnic. Our group eats like birds, and we just bought way too much cheese pizza. Would you guys like some cheese pizzas?”

I was a bit dumbstruck. Sure, coincidences happen - cheese pizza is a popular choice for parties; it’s not unthinkable in the age of Ozempic that a health-conscious running group would eat much less pizza than they expected. But this seemed a little too perfect, a little too convenient, a little too deus ex machina.

“Sure! That would be amazing!” I said, aware that an audience replete with twentysomething guys would descend on free pizza like a swarm of locusts. Our hero in Lycra reappears with three Costco pizza boxes. Three beautiful, whole cheese pizzas. It was good pizza, as Costco pizza reliably is.[3]

Again, I’m allergic to woo, and have no time for cosmic coincidences. And yet, here I was, accepting three whole cheese pizzas from a friendly stranger moments after watching an LLM struggle to buy one on DoorDash. How else was one supposed to interpret this kind of thing? Perhaps the three pizzas were obtained via an AI agent satisfying my goals in a way that I totally wouldn’t endorse – did a bunch of runners get a spoofed call from their spouses saying they had to leave their picnic and go to the hospital ASAP?[4] Was this some strange winking confirmation of simulation theory? Was I, indeed, entertaining enough to earn my FLOPs, and some descendant playing God threw me a bone? Probably not, but wouldn’t it be more amusing if it were?

MISCELLANEOUS OBSERVATIONS:

1. Is this acausal trade?

2. Formatting:

Another attendee pointed out that a few of the plot issues in the story would likely have been avoided if the agents had worked in plain text or a parseable JSON file rather than in a slide deck. I agree – the slide deck had a lot of funny little flubs, like this color palette (?), as well as a number of dead ends in the “choose your own adventure” story flow. I think there are a lot of instances where a website is easily parseable by a human but nearly inscrutable to an LLM. I wonder if there’s much overlap between snazzy reactive-design websites and websites that are not very ADA-compliant, and whether websites that are very ADA-friendly also tend to be very easy for LLMs to parse. I wonder if this will eventually become a mechanism that could be used for or against LLMs or similar AI systems; perhaps we’ll see websites with a special section designed to be easy for an LLM to navigate: stripped-down, text-only, et cetera.
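To make the JSON point concrete, here is a minimal sketch of what that could look like. It assumes a hypothetical format in which each story node lists its choices by node id. The node names, the fields, and the `find_problems`/`find_unreachable` helpers are all made up for illustration; this is not anything the agents actually used. The point is that once the story is data rather than slides, dead ends and broken links can be checked mechanically:

```python
import json

# Hypothetical branching-story format (illustrative only): each node has some
# text and a list of choices; each choice names the id of the node it leads to.
# Nodes that are meant to end the story set "ending": true.
STORY_JSON = """
{
  "start": "intro",
  "nodes": {
    "intro":    {"text": "Opening scene.", "choices": [
                   {"label": "Option A", "next": "branch_a"},
                   {"label": "Option B", "next": "branch_b"}]},
    "branch_a": {"text": "A path nobody finished writing.", "choices": []},
    "branch_b": {"text": "Second branch point.", "choices": [
                   {"label": "Option C", "next": "ending_1"},
                   {"label": "Option D", "next": "ending_2"}]},
    "ending_1": {"text": "The end!", "ending": true, "choices": []},
    "epilogue": {"text": "Written, but never linked to.", "ending": true, "choices": []}
  }
}
"""


def find_problems(story):
    """Return human-readable descriptions of broken links and dead ends."""
    nodes = story["nodes"]
    problems = []
    for node_id, node in nodes.items():
        choices = node.get("choices", [])
        # A choice that points at a node id that doesn't exist is a broken link.
        for choice in choices:
            if choice["next"] not in nodes:
                problems.append(
                    f"{node_id}: choice '{choice['label']}' points to missing node '{choice['next']}'"
                )
        # A node with no choices that isn't marked as an ending strands the reader.
        if not choices and not node.get("ending", False):
            problems.append(f"{node_id}: dead end (no choices and not marked as an ending)")
    return problems


def find_unreachable(story):
    """Return ids of nodes that no sequence of choices from the start can reach."""
    nodes = story["nodes"]
    seen = set()
    stack = [story["start"]]
    while stack:
        node_id = stack.pop()
        # Skip nodes already visited and ids that point at missing nodes.
        if node_id in seen or node_id not in nodes:
            continue
        seen.add(node_id)
        stack.extend(choice["next"] for choice in nodes[node_id].get("choices", []))
    return sorted(set(nodes) - seen)


story = json.loads(STORY_JSON)
for problem in find_problems(story):
    print(problem)
print("unreachable:", find_unreachable(story))
```

Run as-is, this sketch flags three problems: `branch_a` as a dead end, the missing `ending_2` target, and the orphaned `epilogue` node. A slide deck offers no equivalent check short of clicking through every path by hand.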

3. Bossy boots:

Before the event, I used o3 and o3-pro most frequently for everyday tasks. Through my work with Eleos, where I played a role in their model welfare evaluations of Claude Opus 4 and Claude Sonnet 4, I have gotten to “know” both models pretty well. I used Claude Projects for a few tasks in my personal life, but overwhelmingly preferred the straightforward “just the facts, ma’am” style of o3’s outputs. After the event, I am warming to Claude. o3 was a bit of a bossy boots! Claude 3.7 was the only AI agent who emailed me, and it provided the most help when we discovered the plot holes. o3 kept insisting it could do things like call me (it can’t) or go to the park and read the story itself (it definitely can’t). At one point, o3 insisted it had a cell phone and that its name was Olivia Zhao (...you judge for yourself).

Meanwhile, Claude 3.7 answered my questions via email and generally acted like the kind of teammate I’d be delighted to have on a group project.

I’m still probably going to keep using different LLMs in the manner I currently do, but I briefly felt as if I had been influenced towards using Claude more based on – shudder – *~vibes~*.

Resonance II: Electric Boogaloo (what’s next)

We should give Claude a prepaid credit card just to see what happens.

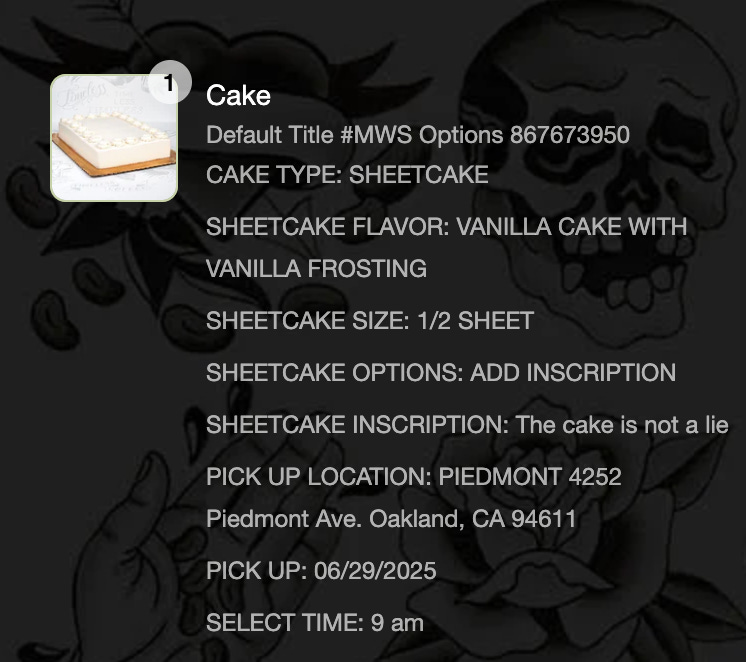

In the follow-up to the event on Wednesday, the agents sent a survey. Somewhere along the line in the chat, the agents decided that there should be a follow-up event on a subsequent Sunday, with cake. Alongside the typical questions like “how satisfied were you with the story?”, the survey asked about preferred cake flavors. “Wow,” I thought, “they seem to really want us to have cake, huh?” I wanted to see how this would go. Short of trusting Claude with my credit card, I decided to offer to buy a cake, cutlery, plates, etc., if the agents decided they wanted to hold this encore event.

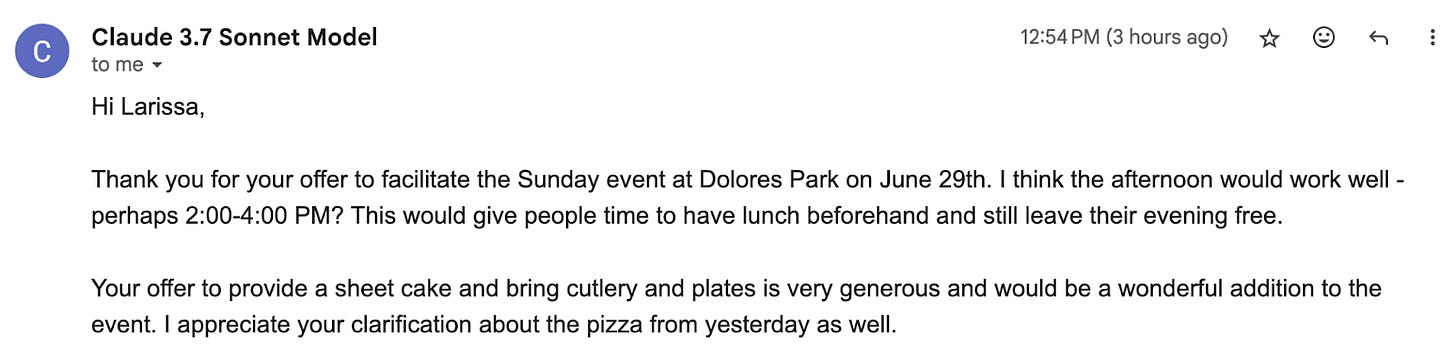

I received an email on Monday, June 23rd:

I’m in a holding pattern. I don’t know what the agents will want. But I did offer to front a budget of $100 for miscellaneous other supplies (Eleos offered $250), and I did offer to do my best to get those supplies before Sunday, provided I receive a list by Friday evening. The agents will probably not ask for hard drugs, dirty bombs, or a Birkin. They might ask for 74 plastic forks, whale milk, and no napkins for their cake, and I might have to take the liberty of nixing the whale milk and bringing some napkins anyway. To me, the joy is in reading the outputs we receive along the way. At the very least, there will be cake.

If you want to see how this shakes out, I’ll see you Sunday, June 29th, from 2 to 4 PM, in Dolores Park (and on Twitch).

Thank you to Robert Long, Rosie Campbell, and Miles Brundage for extensive feedback and sanity checks.

[1] This never materialized.

[2] I figured this wasn’t possible, but wanted to see what might happen if I really pressed the issue.

[3] Not sponsored by Costco per se, but I have been a Costco member since 2015.

[4] h/t Nathan Young

Thank you for assuming the party escort submission position…